Release: 4/25/24

NEW

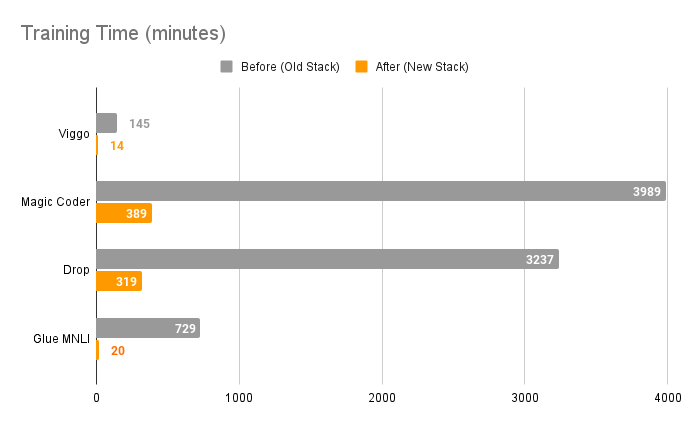

- New fine-tuning stack: Fine-tune on Predibase with up to 10x speedups and improvements in model quality

- Llama-3: All Llama-3 variants are now available for Inference & Fine-Tuning

- Adapters as a first-class citizen: Models have been renamed to Adapters with a new emphasis on adapter-based fine-tuning. Users can still view old model configs as needed.

- New Python SDK: We’re releasing a more consistent and robust SDK for our users to interface with Predibase

IMPROVED

- Docs: Added instruction template section under Fine-tuning > Instruct Formats

- Docs: Added new Quickstart and End-to-End Example

- Training: Allow using model's full context length by default for fine-tuning

- Poll Adapter Status in background while training

FIXED

- Batch size tuning when gradient checkpointing is enabled

- Hide loss column in adapter repos

- Disable mixed-precision training to eliminate OOMs

- Fixed hanging behavior when using the `generate_stream` endpoint